Matthew Leitch, educator, consultant, researcher


Dynamic Management

Version 1.2 first published 13 November 2002.

Why?

Have you noticed that things at work don't often work out the way you expected or wanted originally, and the goalposts keep moving? These days most people have experienced this. Budgetary control seems increasingly inadequate. Scorecards and targets are the new hope but most organisations should already be noticing that they have many of the same flaws as bad old budgeting. Is it hopeless? Should we embrace ‘complexity theory’ and accept chaotic muddle as natural and healthy?

I don't think so. There is a way to manage under great uncertainty and complexity. It breaks rules you may be so familiar with you don't even know you believe them, but there is a way.

As you read on there will be times when problems come up in your mind – objections – reasons why what I'm explaining couldn't work. Trust me and read on. I've spent years working out solutions to those problems and I've solved many problems most people haven't even thought of. If I've missed something you won't find it that easily. And even if you don't agree with everything I'm certain you'll find something here that is new and useful to you.

Please, read on and enjoy!

Definition and introduction

Dynamic Management is simply management that expects the goal system (i.e. goals and the way alternative futures are valued) to change, though not necessarily in a predictable way. It ‘expects’ change in both senses:

change to the goal system is anticipated, which in most real-life situations is a correct assumption; and

change to the goal system is seen as desirable, because goal systems that are static for a long time suggest that nothing more has been learned.

Dynamic Management is applicable to both operations and projects, since changing goal systems occur in both. It is also applicable at every level, from a large organisation down to individuals within it, and individuals in their private lives.

Here are some examples of changing goal systems:

Example: Changed reason for existence. Franklin D Roosevelt suffered a crippling condition as a result of polio. In 1938, at the height of his own popularity and the seriousness of the polio problem, he founded the National Foundation for Infantile Paralysis to fight polio. The organisation quickly grew into a successful fund raiser. In less than 20 years polio had largely been defeated thanks to the Salk and Sabin vaccines. The Foundation was left with a choice: find a new goal or close down. They decided to find a new goal and concentrate on ‘other crippling diseases’ with a particular emphasis on birth defects.

Example: Project level change. In the late 1990s British Telecommunications (BT) began a project to create an internal market by which its divisions could trade with each other. The idea was to give senior managers responsibility for profit making organisations and give them more meaningful management accounts, as well as encourage more parts of the vast company to behave in a commercial way and be competitive. As the telecom gold rush reached its height, a reorganisation was announced which involved taking this idea much more seriously. Now the intention was to create separable businesses that could be floated separately though still as part of the group, making the true value of the BT group clearer to investors and analysts in the city. As separation gathered momentum it became clear that just offering a minority of the shares in its most exciting divisions was not going to be enough. Investors wanted completely separate businesses to be created. The mobile communications division was floated and demerged, with other divisions making preparations. Then the telecom bubble burst and BT's top team changed. Further flotations were abandoned and divisions were encouraged to act together instead of straining to go their separate ways.

Example: A life change. Most people find that becoming a parent is a life changing event, upturning priorities and plans dramatically. Some people adapt faster than others. In my own case, I went from working to have a career for myself to working to get money for my family in about a month.

This is different

Of course in most late twentieth century management thinking goals change, but this is as a result of strategy formation or some other kind of cyclical planning process, not a routine part of day to day, month to month management. Changing goals is thought of as an upheaval, a disruptive, emotional, heavyweight activity restricted only to a senior elite in the corporate hierarchy. In contrast, Dynamic Management makes learning and changing goals a frequent occurrence, carried out at any level in an organisation, on receipt of relevant news rather than because another year has ended.

The main concerns of managers using Dynamic Management include updating goals and forecasts to reflect what is being learned, and keeping plans up to date with the latest goals and forecasts.

While this is common sense, the vast body of management literature and stated practice (though less often actual behaviour) makes the assumption that, once determined, objectives remain fixed. The main concern is to adjust plans to reduce the difference between actual outcomes and original plans and expectations. This idea is particularly strong in project management, and nearly all advice on how to maintain ‘control’ of projects and operations is based on comparing actual results with expectations or targets which reflect the original view rather than the latest and most informed view. This is true regardless of whether or not there is some contract or agreement in place that gives special weight to goals agreed at a particular point in the past.

In recent years, dissent and dissatisfaction with fixed goals has grown. Nearly everyone dislikes their budget process and some companies have already rejected budgeting altogether while others place increasing reliance on rolling forecasts. In the face of consistent failure, ‘performance management systems’ (i.e. the practice of getting individuals to set goals annually and be judged by them) have also come under fire.

At the same time, ‘risk management’ has become increasingly important in many fields and a new view of it is just emerging in which uncertainty is replacing risk as the focus of management. Whereas risk management has tended to be seen as a way to achieve your original objectives come what may, uncertainty management includes managing events that turn out unexpectedly favourably, and it's obvious that in these situations you want to change your goals to take advantage of new opportunities presented. So there are signs of the beginning of a cultural shift towards Dynamic Management, in principle.

Dynamic Management is a natural evolution of what is normally called ‘risk management’. The progression from assuming a stable, known world to Dynamic Management goes something like this:

Management in a predictable world: We know what's going to happen in future and lay our plans accordingly.

Risk Management: We have goals that do not change and a plan, but accept that things might go unexpectedly wrong so we have to manage those risks by making them less likely and reducing the impact if they occur. We assume we can easily determine everything about the future except for whether certain outcomes will occur or not, and even there we know the probabilities of these risk events.

Uncertainty Management: We have goals that do not change and a plan but accept that some things might happen that are different from our expectations. Some of those unexpected outcomes are worse than our expectation but some are better (i.e. they are opportunities). Furthermore, we often have uncertainty that could be reduced by more research but this could be costly. Managing uncertainty involves reducing negative impacts, increasing positive impacts, altering the odds to make good things more likely and bad things less, and doing research or monitoring to reduce uncertainty.

Dynamic Management: The same as for Uncertainty Management except that our goals are themselves something about which there is some uncertainty. They may be vague now and change in the future.
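The Uncertainty Management step above mentions that research can reduce uncertainty but may be costly. A minimal sketch of that trade-off follows, using the standard "expected value of perfect information" calculation. The actions, probabilities, and payoffs are all invented for illustration and are not taken from any real case.

```python
# Hypothetical decision: launch a product or hold, with uncertain demand.
# All figures are invented for illustration.
probabilities = {"high demand": 0.4, "low demand": 0.6}
payoffs = {
    "launch": {"high demand": 100.0, "low demand": -50.0},
    "hold": {"high demand": 0.0, "low demand": 0.0},
}


def expected_value(action: str) -> float:
    """Probability-weighted payoff of one action across all outcomes."""
    return sum(p * payoffs[action][state] for state, p in probabilities.items())


# Best we can do if we must decide now, under uncertainty.
best_without_research = max(expected_value(action) for action in payoffs)

# Best we could do if research told us the demand level before we decided:
# in each state we would pick the best action for that state.
best_with_perfect_research = sum(
    p * max(payoffs[action][state] for action in payoffs)
    for state, p in probabilities.items()
)

# Upper limit on what the research is worth paying for.
value_of_research = best_with_perfect_research - best_without_research
```

In this made-up case the research is worth commissioning only if it costs less than `value_of_research`. Note that the calculation counts the favourable outcome as well as the unfavourable one, which is the difference between the Uncertainty Management view and the narrower Risk Management view.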

The main advantages of Dynamic Management

Uncertainty exists, including uncertainty about goals, in all but the most trivial ventures. The question is whether it is better to ignore it, or to deliberately manage it. Personally, I'd rather expect the unexpected than be shocked by it, particularly when it comes to having the goal posts moved. The main advantages of Dynamic Management are:

Better decisions: A more accurate view of the future (i.e. correctly reflecting all forms of uncertainty faced) should lead to better decisions than a distorted view. Ultimately, the true contribution of activities to long term success should be greater if we are always guiding action using the best, most informed view of what we need to achieve. This should be more effective than driving towards goals that are up to a year out of date.

Reduced paralysis: If you feel you can't begin a project without clear, agreed goals you can rely on then the chances are you can't begin a project. The reality of life is that some degree of muddle, politics, and turmoil is always there. That's not to say that establishing what you can about the goals at the outset is not valuable; it is very valuable. Paralysis can also result from finding that events unfold differently from the plan and no contingencies have been considered. Dynamic Management reduces both these forms of paralysis, improving results and reducing stress.

Reduced agency problems: ‘Agency Theory’ is a body of theory about what happens when we (the Principal) pay someone else (our Agent) to do something for us. A common problem is where we can measure some of the results of the person's work, but not the true contribution of what they are doing. For example, we might be able to measure a sales representative's gross sales, but not the profit made on them, long term. This leads to dysfunctional behaviour. For example, the sales representative may sign up business at too low a price, by telling lies that will later destroy the business. The Agent has to balance the reward from the incentive payment against the reward from long term success of the business. The usual assumption is that the basis of the reward is fixed but if, instead, it is likely to change, and to change so that it better reflects the true contribution of the Agent, the Agent's desire to perform the dysfunctional behaviour driven by the current incentive scheme is reduced.

The biggest cost of agency can be the consequences of incentivising people to pursue outdated targets, such as when company directors are paid huge bonuses for achieving targets long after the targets are known to have been a bad idea.

Less wasted management time: One reason for wasted management time is the difference between planned actions and actual behaviour. As the difference between original plans and goals and actual requirements grows, as it normally does, the discrepancy between plans and behaviour also grows. Managers spend more and more time trying to explain the difference between actual behaviours and outcomes and those of the original (outdated and increasingly irrelevant) plan. This is wasted time. Dynamic Management adjusts more quickly to actual requirements as they emerge, thus reducing the discrepancy between plans and behaviours, and so reducing wasted management time.

I can't find any empirical research that specifically assesses the effects of attempting to practise management methods that assume fixed goals in conditions where goals do or should change. Therefore I can only speculate based on personal experiences as an employee in various organisations.

I think that most of the time people don't follow the management methods and principles they say they follow. I think we actually do spend quite a lot of time trying to second guess how objectives given to us might change, though we might not do this very efficiently or systematically.

However, at times when we are disappointed by the results we are getting someone will usually suggest it's time to get things ‘under control’ and manage ‘properly’, by which they mean against the original goals. The more energetically and systematically this is done the worse the results will become, unless there are some really good things happening elsewhere to offset the effect of this ineffective management method. It would be much more effective to practise Dynamic Management, knowingly and systematically, when better results are desired.

Two famous pieces of management research lend a little support to this.

A famous study of manager behaviour is Henry Mintzberg's comparison of actual behaviour with the theoretical notion of the scientific, systematic manager. Mintzberg found managers more interested in the latest gossip than in formal management reports from the company's information systems. They made a myriad of decisions every day and their direction gradually changed over time, rather than making big strategic decisions occasionally and then systematically rolling out the implications in detail.

Another famous study is the research at the end of the 1950s by Charles Kepner and Benjamin Tregoe. They observed managers actually doing their jobs and concluded that there were three basic mental activities that occupied most of their thinking time: problem analysis, decision analysis, and potential problem analysis (which is a form of risk management). Problem analysis includes comparisons of behaviour expected or desired with actual behaviour, but all their examples are for things like problems with manufacturing machinery, where what should be happening is much clearer and with no uncertainty, so this is not comparable with control against a plan/budget/forecast. Also, the decision analysis technique they came to recommend recognises that there may be many objectives to meet simultaneously and resembles the technique I suggest later. Finally, in recent years Kepner-Tregoe has renamed ‘Potential Problem Analysis’ as ‘Potential Problem and Opportunity Analysis’, to recognise that things can turn out unexpectedly better as well as worse.

Key concepts of Dynamic Management

Dynamic Management uses some simple concepts:

Goal systems: A goal system is a combination of an objective function and a set of goals. There is also an assumed overall aim of maximising the value of the objective function in any decision making or planning. These ideas are explained in more detail after this list of concepts.

World models: Our ideas about what will happen in future and how our actions can affect that depend on information we acquire about what the situation is now, and on models (usually very informal and even intuitive) about how the world works. These models are very often in the form of cause and effect networks, and these are often the inspiration for our networks of goals.

Evaluation and time: The value of a plan is dependent on two times. Firstly, the time up to which costs and benefits are considered sunk. Normally, this is chosen to be ‘now’ but there are exceptions and of course ‘now’ is changing all the time. Secondly, the evaluation needs to use the objective function, world view, forecasts, and so on that we held or will hold at a particular point in time. These two times could be called the Present Time and the View Time respectively.

Uncertainty and risk: ‘Risk’ suggests bad things, i.e. hazards, whereas uncertainty does not have that connotation. More subtly, risk in formal models implies a situation in which we know everything relevant about some future event except for some residual doubt that we simply cannot eliminate by further research and therefore model using the concepts of randomness and probabilities. Uncertainty includes situations like this, but also situations where we could reduce the uncertainty by more research i.e. getting more information and doing more thinking. Uncertainty is a much more useful term and the one used in Dynamic Management.

Monitoring and updating on news – not dates: Most advice on how to exercise control over a project or operation suggests regular reviews, in order to allow regular comparison with the plan/budget. Dynamic Management is different because monitoring is done to update views and so it is done on receipt of significant news. A key technique in Dynamic Management is monitoring for new information (which might be done continuously, frequently and regularly, or at variable frequency depending on circumstances) and responding by reviewing thinking immediately on receipt of news rather than at some regular interval. (Where there is a risk of failing to update frequently enough one could set a maximum interval between update meetings.)

Stable updating: Revising objective functions, goals, plans, forecasts etc frequently based on all information acquired to date could lead to unstable goals if done badly. This is because of a well known weakness in human reasoning, which is the failure to combine new evidence with old evidence. Our tendency is to forget existing evidence and be influenced entirely by the new evidence, if we believe it. There are also some situations where ‘feed forward’ is unstable, leading to oscillating behaviour that amplifies out of control. (Incidentally, feed back can also lead to oscillating behaviour that amplifies out of control.) This is not common in practice but both forms of error need to be understood and avoided. We want to minimise the shock of changing goal systems and forecasts.

Effective responses: No management process of analysis is useful if there are no effective responses you can take to the situation analysed. Fortunately, there is an armory of powerful techniques available for managing uncertainty, including uncertainty about goals. These range from simple thought processes jotted on the back of an old envelope to sophisticated computer modeling and decision making techniques.
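The ‘Stable updating’ concept above warns against letting new evidence wipe out old evidence. One common way to combine the two, offered here only as an illustrative sketch and not as a technique prescribed by Dynamic Management, is a precision-weighted average (the normal-normal Bayesian update). It assumes each estimate comes with a variance expressing how uncertain it is, and that the old and new evidence are independent. The `Estimate` class and all figures are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Estimate:
    mean: float      # our best guess, e.g. forecast sales
    variance: float  # how uncertain we are about it (larger = less sure)


def combine(old: Estimate, new: Estimate) -> Estimate:
    """Blend old and new evidence, weighting each by its precision (1/variance).

    Assumes the two pieces of evidence are independent; that is an
    assumption of this sketch, not something the text guarantees.
    """
    weight_old = 1.0 / old.variance
    weight_new = 1.0 / new.variance
    variance = 1.0 / (weight_old + weight_new)
    mean = (weight_old * old.mean + weight_new * new.mean) * variance
    return Estimate(mean, variance)


# Invented figures: a well-founded existing forecast and one noisy piece of news.
forecast = Estimate(mean=100.0, variance=25.0)
news = Estimate(mean=130.0, variance=100.0)
updated = combine(forecast, news)
```

With these made-up numbers the forecast moves towards the news but only part of the way, because the existing evidence is treated as more reliable. That partial movement is the kind of damping that keeps frequently revised forecasts and goals from lurching about.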

In some way – perhaps not very precisely – we need to be able to put a value on alternative future outcomes at future times so that we can choose the ones we prefer. A mathematical phrase for a rule that does this is ‘objective function’. An objective function maps future sequences of states of the world to the value they have for us, in utility terms. Objective functions have various inputs from which to derive an overall utility value. The inputs can be called the dimensions of the objective function.

The assumed aim is to maximise the utility we get.

Applying our objective function (which could well be no more than a judgment) helps us identify particular sets of states that look highly desirable to us and which we may choose to take as goals. Goals (also known as objectives, targets, ‘a vision’, and so on) are a mental device for creating and communicating plans. A goal statement expresses a constraint on future events, such as ‘Achieve a turnover of £10m by the end of the year’ or ‘Have higher sales than our competitors.’ There are a number of ways to use goals in thinking, but typically we identify a goal that looks worth pursuing and which we think might be achieved easily enough, then try to think of a way to achieve it. For a given objective function, we might easily find another set of goals and also the objective function itself might change leading us to prefer new goals.

| Objective function dimensions | Goals |
|---|---|
| Return on total assets | Return on total assets of 15% |
| Cash inflow in Q4 | Cash inflow in Q4 greater than in Q3 |
| Customer trials of the new product in the first two months | Customer trials of the new product of at least 2,000,000 in the first two months |
| When, if at all, the project is completed | Project completion by the end of Q4 |
| Whether or not the customer agrees to a pilot study at the meeting tomorrow | Customer agrees to a pilot study at the meeting tomorrow |
| Whether or not we run advertising on TV for the new product | Run advertising on TV for the new product |

Often the way we naturally word dimensions of objective functions also shows which levels of the dimension we prefer, and so look very similar, or even identical, to goals. For example, ‘Minimise publicity’ might mean that (1) the amount of publicity is a dimension of the objective function, (2) less is more desirable than more, but (3) we haven't actually picked a particular constraint on the amount of publicity. Hence, this is clearly part of the objective function and not a goal. Other examples are less clear with the objective function dimension worded so that it is a candidate goal as well.
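The distinction between objective function dimensions and goals can be sketched in code. In this minimal illustration the objective function scores any outcome, while each goal is only a pass/fail constraint on outcomes. The weights, the outcome figures, and the reading of ‘of 15%’ as ‘at least 15%’ are all assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

# An outcome gives one value for each dimension of the objective function.
Outcome = Dict[str, float]


def objective(outcome: Outcome) -> float:
    """Hypothetical objective function; the weights are invented."""
    return (
        100.0 * outcome["return_on_total_assets"]  # more is better
        + 0.5 * outcome["q4_cash_inflow"]          # more is better
        - 2.0 * outcome["publicity_items"]         # 'Minimise publicity': a direction, no target
    )


@dataclass
class Goal:
    description: str
    is_met: Callable[[Outcome], bool]  # a constraint on future events


goals: List[Goal] = [
    # Assumption: 'of 15%' is read as 'at least 15%'.
    Goal("Return on total assets of 15%",
         lambda o: o["return_on_total_assets"] >= 0.15),
    Goal("Cash inflow in Q4 greater than in Q3",
         lambda o: o["q4_cash_inflow"] > o["q3_cash_inflow"]),
]

# Two invented outcomes to compare.
outcome_a = {"return_on_total_assets": 0.16, "q3_cash_inflow": 4.0,
             "q4_cash_inflow": 5.0, "publicity_items": 3.0}
outcome_b = {"return_on_total_assets": 0.14, "q3_cash_inflow": 4.0,
             "q4_cash_inflow": 9.0, "publicity_items": 0.0}

goals_met_a = [g.is_met(outcome_a) for g in goals]
goals_met_b = [g.is_met(outcome_b) for g in goals]
```

Here `outcome_a` meets both goals while `outcome_b` misses the return on assets goal, yet `objective(outcome_b)` is higher than `objective(outcome_a)` under these made-up weights. A different set of goals could serve the same objective function better, which is why goals are best treated as revisable devices rather than as the thing that is ultimately valued.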

Goals normally form a network because we believe (based on our theories about how the world works) that achieving some goals is necessary or helpful in achieving some other goals. This cascades down into goals like ‘Write specification document by the end of next week.’ so plans are just the ‘bottom’ portion of our goal systems. (Dynamic Management expects change to all levels of goal, and to the objective function, not just the lower level.)

In many management systems definitions are given to try to distinguish between different ‘levels’ of goals and the word ‘goal’ may actually be reserved for a particular level. For example, there might be definitions for ‘mission’, ‘vision’, ‘values’, ‘goals’, ‘objectives’, ‘targets’, ‘tasks’, and ‘actions’. It is also common to try to distinguish ‘what’ from ‘how’. In practice it is difficult to make these distinctions work and also difficult to form the various types of goal into strict hierarchies. That is why I suggest calling everything a goal and modeling the system of goals as a network in which we recognise causal links between goals. It is simpler and more general. Furthermore, since Dynamic Management holds that all goals are subject to uncertainty and revision there is no need to distinguish between different goals and set some aside as fixed.
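A goal network of this kind can be sketched as a simple directed graph in which every item is just a goal and the only structure is the set of causal links. The goal wordings below are borrowed from the examples above, but the links between them are invented for illustration, as are the function names.

```python
from typing import Dict, List, Set

# Each goal maps to the goals it is believed to help achieve.
# The links are hypothetical; they stand for our theories about cause and effect.
supports: Dict[str, List[str]] = {
    "Write specification document by the end of next week":
        ["Project completion by the end of Q4"],
    "Project completion by the end of Q4":
        ["Cash inflow in Q4 greater than in Q3"],
    "Run advertising on TV for the new product":
        ["Customer trials of at least 2,000,000 in the first two months"],
    "Customer trials of at least 2,000,000 in the first two months":
        ["Cash inflow in Q4 greater than in Q3"],
    "Cash inflow in Q4 greater than in Q3": [],
}


def plan_level_goals(network: Dict[str, List[str]]) -> List[str]:
    """Goals that nothing else feeds into: the 'bottom' of the network."""
    supported = {target for targets in network.values() for target in targets}
    return sorted(goal for goal in network if goal not in supported)


def supporting_goals(network: Dict[str, List[str]], goal: str) -> Set[str]:
    """All goals that directly or indirectly support `goal`.

    If `goal` is revised, these are the ones whose purpose needs reviewing.
    """
    found: Set[str] = set()
    frontier = [goal]
    while frontier:
        current = frontier.pop()
        for candidate, targets in network.items():
            if current in targets and candidate not in found:
                found.add(candidate)
                frontier.append(candidate)
    return found


bottom = plan_level_goals(supports)
to_review = supporting_goals(supports, "Project completion by the end of Q4")
```

No goal in this structure is marked as a ‘mission’ or a ‘task’; what looks like a plan is simply whatever sits at the bottom of the network. When a goal higher up changes, `supporting_goals` lists what may need rethinking.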

Example: A product launch. Imagine you are on a project to launch a new product into a consumer market. The product is a fizzy drink in a can. It's sticky, sweet, unhealthy, and tastes nasty but initial market research suggests it has a big future. The question is how to launch it for maximum impact and profit. An initial workshop identifies and values 9 things which would be valuable about the outcome of the launch, and 23 things that would also be valuable and which would tend to cause the desired effects on the original 9 dimensions. For example, ‘strong advertising’ is likely to be a causal factor for ‘high initial trial’ and ‘high brand awareness’. 18 of the 23 are, in effect, elements that could be expanded into items included in a launch plan, such as retailer promotions and poster advertising. The thinking so far has clarified the objective function i.e. how you will value future outcomes.

The next stage is to come up with a list of elements for the launch plan, which will of course be more limited than the items in the objective function, but guided by them. The more valuable ideas in the objective function have more chance of having a counterpart in the launch plan. The launch plan is, in effect, part of the set of goals for the launch.

You may think these sound more like actions than goals, but the items on your launch plan do not have detail behind them, so they are no more than goals to be satisfied by more detailed planning. The other goals might be to reach certain levels on other items within the objective function, such as achieving unprompted brand awareness in 12% or more of the 12 to 16 age group in the South West by the end of June.

Typically, the objective function is more important than the goals.

When evaluating plans, Dynamic Management seeks to keep both the Present Time and the View Time at ‘now’ as much as possible.
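The roles of the two times can be shown with a small sketch. Here the Present Time decides which periods count as sunk, and the View Time decides whose forecast is used. The forecasts are invented figures, and `plan_value` is a hypothetical helper that simply adds up net benefits with no discounting.

```python
from typing import Dict, List

# forecasts[view_time][period] = net benefit expected in that period,
# as believed at that view time. All figures are invented.
forecasts: Dict[int, List[float]] = {
    0: [-50.0, -30.0, 40.0, 80.0],  # the original view, formed at period 0
    2: [-50.0, -45.0, 20.0, 60.0],  # the latest view, formed at period 2
}


def plan_value(views: Dict[int, List[float]],
               present_time: int, view_time: int) -> float:
    """Value of the plan, ignoring periods before present_time as sunk
    and using the forecast held at view_time."""
    return sum(views[view_time][present_time:])


# Both times at 'now' (period 2): what Dynamic Management aims for.
value_now = plan_value(forecasts, present_time=2, view_time=2)

# The original evaluation: nothing sunk, original beliefs.
value_original = plan_value(forecasts, present_time=0, view_time=0)

# Hindsight: the whole plan, judged with today's beliefs.
value_hindsight = plan_value(forecasts, present_time=0, view_time=2)
```

With these numbers the plan looks poor in hindsight, but the remaining part is still worth doing when judged from now with the latest view. Comparing actual results against `value_original` mixes up the two times and says little about what to do next.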

Skill at Dynamic Management lies in applying general principles more and more effectively in particular situations and fields where you manage. It isn't possible to work out everything from first principles because things happen too quickly. You need to build up familiarity with the issues and techniques applicable in particular fields and types of situation.

A lot has already been learned about uncertainty management in medicine, finance, and large construction and IT projects. There are also problem situations that come up in different fields but have very similar characteristics.

Example: Boy meets girl. The boy meets girl situation has a lot in common with other situations where persuasion is important. Let's imagine boy and girl meet and take a fancy to each other, but fear humiliating rejection and so try not to show it – at least not too obviously. Each faces uncertainty about the feelings of the other, and tries to resolve that by probing for information without giving too much away. Both try to avoid provoking a decision from the other until they are sure they have made a good impression and the decision will go in their favour. ‘Will you marry me?’ asked too early is bound to get ‘No’ in response and the refusal may be difficult to recover from. On the other hand, wait a little longer, live with the uncertainty a bit longer, and the result is more likely to be ‘Yes’.

Example: Mental acceleration. Certain projects that involve difficult problem solving often follow a particular pattern. At the start, progress is slower than expected with people seeming to waste time on dead ends. As time begins to run out progress starts to accelerate as people learn more about the problem and possible solutions. In the last few percent of the project time a vast amount is resolved and completed because people have learned from their earlier mistakes and the confusion has cleared. What makes these projects stressful is that this doesn't always happen. It is important to ensure that people really are learning effectively in the earlier stages if acceleration is to happen later.

Four examples of Dynamic Management

Examples of recognised management methods that resemble Dynamic Management are surprisingly rare. However, I have found four examples, most of which happen to be from the world of IT.

Active Benefits Realisation

For many years a controversy has raged between those who believe computers have been a great benefit to the world and to businesses and those who point to the actual statistics which usually show none. Faced with the problem that an IT project is as likely to have a negative effect on an organisation as a positive effect it was only a matter of time before someone coined the phrase ‘benefits management’ and offered it to the world (for a price) as the answer. ‘You want more benefits from your IT? Then you need Benefits Management.’

The obvious approach to this was to set goals, measures, targets, and so on at the outset, monitor actual results, and ‘manage’ them to somehow force into being the benefits wished for at the start. Case studies of actual IT projects consistently show that this is rarely fulfilled other than by sheer fluke. More recently, a survey of IT practices in Australia by Chad Lin and Graham Pervan showed that 83% of their respondents did not think it was possible to anticipate all potential benefits at the project approval stage. The fact is that the benefits emerge over time. (Note that it is these benefits that should be the goals of the project, though this is rarely how people see it.)

In response to this problem of evolving benefits, Dr Dan Remenyi and a colleague have proposed what they call ‘Active Benefits Realisation’ in which representatives of all stakeholders confer repeatedly during the project to update their ideas about the benefits of the project as learning proceeds, as well as perform formative evaluations (i.e. evaluations of potential benefits that help to shape the project).

Dynamic Systems Development Method

In the 1980s many software developers realised that many projects were failures because the system delivered was no longer the system that was actually needed, even if it met the original requirements perfectly. ‘Prototyping’ became fashionable as a way to show customers/users what they were going to get at an early stage of development so that their reactions and realisations about their requirements could be captured and incorporated.

It was also realised that doing a series of incremental developments, each of which provided something useful, even if it was not the full and final answer, was more useful and less risky than a single, longer development project.

The ‘Dynamic Systems Development Method’ (DSDM) is a written down method for doing this kind of development.

The main objective of the method is different from the ‘waterfall’ approach more common at the time. Instead of attempting to deliver a system that meets the original, given requirements, DSDM aims to deliver a system that meets the actual requirements at the time the system comes into operation.

Dramatic improvements in productivity and success rate are claimed for DSDM (which is promoted by a non-profit organisation), though these are not available in all types of project as DSDM concentrates on systems where the user interface is important.

Evolutionary Development/Project Management

An approach to project management that is very similar to DSDM in its principles but has been used on some much bigger projects and for longer is called Evolutionary Project Management. According to Tom Gilb, Hewlett-Packard has used it in at least eight divisions since 1985, with the main benefit being the ability to get well-informed feedback from users at an early stage and respond to it. Other users include NASA, Loral, Lockheed Martin, and IBM Federal Systems Division.

The idea is to deliver project results early, through delivering frequent, useful increments – typically 50 micro-projects, each representing about 2% of what a traditional project would be. The aim is to deliver the most useful increments first, where possible.

However, this is not the same as Incremental Delivery, which means delivering small slices of the original requirements. Evolutionary Delivery allows for requirements to change as a result of changes and discoveries during the project. Detailed plans are drawn up for the next increment only, but there are still outline plans and architectures for other increments (even though these will probably change).
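The difference between evolutionary and merely incremental delivery can be sketched as a loop in which the list of remaining increments is revised after every delivery. This is an illustrative sketch only, not Gilb's method as published: ranking by value per unit of cost is an assumption standing in for ‘most useful first’, and the increments and the feedback rule are invented.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Increment:
    name: str
    value: float  # estimated usefulness to the customer
    cost: float   # estimated effort


def next_increment(backlog: List[Increment]) -> Increment:
    """Pick the increment with the best value for its cost (an assumed rule)."""
    return max(backlog, key=lambda inc: inc.value / inc.cost)


def deliver_evolutionarily(
    backlog: List[Increment],
    revise: Callable[[Increment, List[Increment]], List[Increment]],
) -> List[str]:
    """Deliver one increment at a time, revising what remains after each."""
    remaining = list(backlog)
    delivered: List[str] = []
    while remaining:
        chosen = next_increment(remaining)
        remaining.remove(chosen)
        delivered.append(chosen.name)
        # Requirements may change in the light of what was just delivered.
        remaining = revise(chosen, remaining)
    return delivered


def feedback(done: Increment, remaining: List[Increment]) -> List[Increment]:
    """Invented feedback: after 'search', users drop 'dashboard' and ask for 'export'."""
    if done.name == "search":
        kept = [inc for inc in remaining if inc.name != "dashboard"]
        return kept + [Increment("export", value=10.0, cost=2.0)]
    return remaining


original_requirements = [
    Increment("search", value=8.0, cost=2.0),
    Increment("reports", value=9.0, cost=3.0),
    Increment("dashboard", value=6.0, cost=3.0),
]
order = deliver_evolutionarily(original_requirements, feedback)
```

Incremental Delivery would have worked through the three original items in some order. In the evolutionary version one of them is never built and something nobody asked for at the start is delivered second, because only the next increment was ever planned in detail.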

According to Gilb, the main difficulty for organisations adopting this is getting used to thinking of incremental ways to deliver. However, once people get used to thinking about the value their ‘customer’ might get from the project they can see how deliveries other than what might have been asked for initially would be useful. Gilb gives a number of guidelines for identifying suitable increments, including:

Look for things that will be useful to the customer in some way, however small.

Do not focus on the design ideas themselves, especially when thinking of the first, small increments.

Do not be afraid to use temporary ‘scaffolding’ designs, as they can still deliver value and provide practical learning opportunities.

If you help your customer in practice, now, where they need it you will be forgiven many mistakes.

Getting practical experience helps you understand things better.

Do early deliveries to cooperative, mature, local parts of the organisation.

When something is going to take a long time, whatever you do, start it early and do other things while you wait to keep up the momentum of useful deliveries.

If you are developing something to work with a new system when it is delivered, consider if you can deliver the same thing earlier to help with the old system and learn before the new system comes along.

Try to negotiate ‘pay as you really use’ contracts for things you don't want to commit to buying outright or with uncertain demand.

Talk to real customers or end users as they are a good source of ideas for things that can be delivered as increments.

In those rare cases where tiny increments cannot be found, Evolutionary Management resorts to the more familiar risk management techniques of insurance, contracting risk to others, sticking with established technology, and so on.

Dynamic Strategic Planning

Another method that has been applied on large scale engineering projects is Dynamic Strategic Planning, as described by Professor Richard de Neufville of MIT. This is long term planning that recognises the difficulties of forecasting and tries to build in flexibility in the plan, and adjust the plan according to events that occur. It is like playing chess, in that the planner thinks many moves ahead, but only commits to one at a time, and adjusts the game plan to events as they unfold.

An important aspect of Dynamic Strategic Planning is the attention paid to the interests and powers of major stakeholders, though this does not appear to be explicitly linked with the problem of anticipating possible changes to goals as the project unfolds.

How to manage Dynamically

I have defined Dynamic Management as management that expects goals to change and given the basic concepts. Within this framework there are many ways one could go about actually managing dynamically so the following proposals are just one version – a version that will surely change in future years.

The demands of Dynamic Management create some important general constraints on any techniques used:

Speed: If every change to objectives required an off-site meeting for executives complete with PowerPoint slides, group work, and flip charts then Dynamic Management would not be practical. If all financial forecasting and planning took as long as an annual budget round then Dynamic Management would be out of the question. Fortunately, these wasteful techniques are not needed.

Coordination: When Dynamic Management is applied in a team or collection of teams it is not a mandate for unconstrained, uncoordinated improvisation. On the contrary, as change is so frequent it needs to be handled smoothly and systematically, as a routine matter, and involving all and only the required people for each change.

More specifically, Dynamic Management requires the following:

Better, quicker understanding of goal systems.

Faster uncertainty management extended to include goal systems.

Faster teamwork that gets more people involved in rethinking.

Incentives that point in the right direction.

Building flexibility into contracts.

Planning and forecasting.

Management information focussed on learning and rethinking.

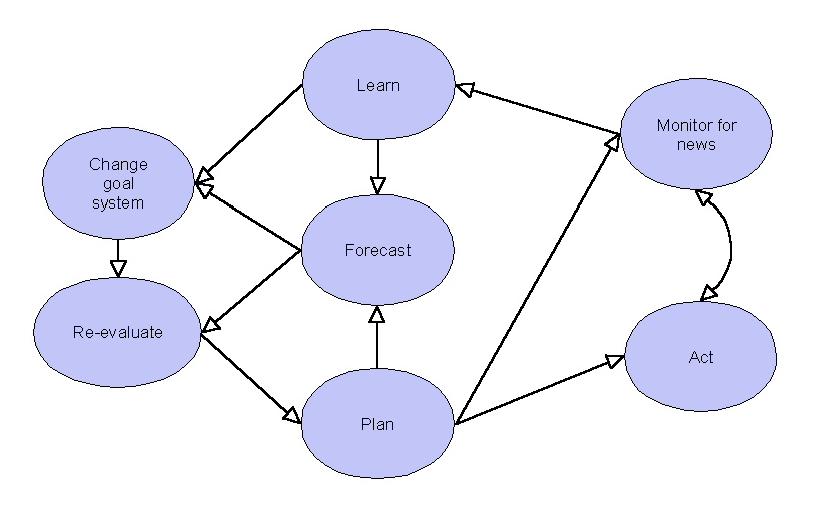

A new management process.

Easy adaptation to different domains and scales of organisation.

Creating pockets of Dynamic Management in static organisations.

Techniques to achieve all of these are described in the following sections.

Better, quicker understanding of goal systems

In one sense, the ultimate goal is unchanging - maximise utility. However, this on its own is no guide to action and we have to think about how future outcomes have value for us, and try out specific goals that might be worth planning to achieve. In practice, our objective functions and goals look more like shopping lists than mathematical expressions. Why?

Multiple dimensions of value

The objective function is a function that places a value on an imagined future. This is not the state at a point in future time but rather the value of an entire future path.

A common approach to evaluating the value of some proposed business venture is to convert its impact into cash flows and calculate a single monetary value using Discounted Cash Flow techniques, ideally using a risk adjusted rate of return reflecting the company's weighted average cost of capital. This is called the Net Present Value, and often considered to be the same as the Shareholder Value created by the venture. Impressive as this usually appears it has very serious drawbacks, of which the most serious have been pointed out by Igor Ansoff in his classic book, ‘Corporate Strategy’.
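As a minimal sketch of the Discounted Cash Flow calculation just described, the Python below discounts a list of yearly cash flows at a single rate. The cash flows and the 10% rate are invented for illustration and stand in for real forecasts and a risk adjusted rate.

```python
def net_present_value(rate, cash_flows):
    """Discount cash flows to today.

    cash_flows[0] occurs now (year 0), cash_flows[1] at the end of
    year 1, and so on.
    """
    return sum(cf / (1 + rate) ** year for year, cf in enumerate(cash_flows))


# Hypothetical venture: invest 1000 now, receive 400 a year for three years.
flows = [-1000, 400, 400, 400]
npv = net_present_value(0.10, flows)
print(round(npv, 2))
```

With these made-up figures the Net Present Value is slightly negative (about -5.26) even though the undiscounted cash flows add up to +200. The result is only as good as the forecasts fed into it.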

Looking ahead to the short term, identifying cash flows usually looks tough but possible. However, the further ahead you look the more difficult it is to convert every impact into a cash flow. Many important positive and negative effects of a strategy, such as a strengthened balance sheet, or increased intellectual capital, do not convert readily into cash flows. The uncertainty is simply too great.

Ansoff suggests a different approach which is also much more practical, being quicker and more robust, and better at suggesting specific actions than ‘maximise shareholder value’. Ansoff suggests a system of points for valuing various outcomes, including things that do not convert to cash flows. This may be used in combination with the Net Present Value of relatively short term and predictable cash flows to provide an overall score for desirability. This is, in effect, a heuristic scoring method similar in principle to those used in chess playing computer programs.
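To show the general shape of such a scoring method, here is a rough sketch. It is not Ansoff's actual scheme: the outcome names, the points, and the exchange rate between money and points are all hypothetical.

```python
# Hypothetical points for outcomes that do not convert readily to cash flows.
POINTS = {
    "stronger_balance_sheet": 30,
    "intellectual_capital": 20,
    "customer_goodwill": 10,
}
POINTS_PER_MILLION_NPV = 10  # assumed exchange rate between money and points


def desirability(short_term_npv_millions, outcome_ratings):
    """Combine short-term NPV with points for non-cash outcomes.

    outcome_ratings maps an outcome name to a rating from 0.0 (not
    achieved) to 1.0 (fully achieved).
    """
    npv_points = short_term_npv_millions * POINTS_PER_MILLION_NPV
    other_points = sum(POINTS[name] * rating
                       for name, rating in outcome_ratings.items())
    return npv_points + other_points


strategy_a = desirability(2.0, {"stronger_balance_sheet": 0.5})
strategy_b = desirability(1.5, {"intellectual_capital": 1.0,
                                "customer_goodwill": 0.5})
print(strategy_a, strategy_b)
```

In this invented case strategy B scores higher (40 against 35) despite its lower short-term NPV, because the points capture effects that the cash flow forecast leaves out.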

While the specific set of measures suggested by Ansoff may not be useful in every case, the simple idea of listing all the desirable aspects of future outcomes that would influence the choice of strategy, and giving some indication of their relative importance, is easy and useful. It can be used easily in the vast number of situations where quantification is not worthwhile.

With a bit more work one can start to structure the objectives to show how they are linked causally. One can also think about the relationship between degrees on each dimension and the actual value. For example, for some things one either hits the target and gets the value, or doesn't and gets nothing. More often, the utility of an outcome varies more smoothly. For example, typically, reducing costs a certain amount is more desirable than reducing them a little less than that, for all degrees of cost reduction, other things being equal.
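The two shapes of relationship mentioned above can be sketched as simple functions. The function names and all the numbers are hypothetical.

```python
def threshold_value(achieved, target, value_if_hit):
    """All-or-nothing: full value at or above the target, nothing below."""
    return value_if_hit if achieved >= target else 0.0


def smooth_value(achieved, value_per_unit):
    """Smoothly varying: every extra unit achieved is worth a bit more."""
    return achieved * value_per_unit


# Hypothetical result: a cost reduction of 95 against a target of 100.
cost_cut = 95
all_or_nothing = threshold_value(cost_cut, target=100, value_if_hit=50.0)
graded = smooth_value(cost_cut, value_per_unit=0.5)
print(all_or_nothing, graded)
```

Just missing the target is worth nothing under the first function but nearly full value under the second, which is why it matters to know which shape applies to each dimension.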

The next step is to select a set of goals, which usually means specific values for aspects of the future situations.

Example: A better garden – part 1. What is a ‘better’ garden? Imagine you're just starting out on the property ladder but lucky enough to be able to afford a property with a garden. Your first attempt to write down something about your objective function might look like this:

Attractive: Neat, segregated, green or blooming all year round.

Low maintenance: Low maintenance plants (daisy, lavender, etc), weed suppressing mulches for all beds, easy-trim lawn edges.

Imagine you don't define a formal objective function, but your judgment is that a very messy garden is unacceptable but there's no need to create perfection. Similarly, low maintenance is important but you are willing to make some small concessions if that makes a significantly more attractive garden. Planting annuals each year is too much effort, but there are other smaller efforts you are willing to make.

As for goals, you might decide to replace certain plants at a particular time of year, redesign the edges of the lawn in spring, and put down weed suppressing plastic sheeting under bark chips. All these things have value to you, as the objective function shows. However, on investigating further you decide to change your goals to replace the bark chips with gravel for certain parts of the garden near the house.

A better garden – part 2: Later, you learn more about the environmental impact of gardens and, being concerned for our environment generally, you decide you would like to change your objective function as follows:

Attractive: Neat, segregated, green or blooming all year round.

Low maintenance: Low maintenance plants (daisy, lavender, etc), weed suppressing mulches for all beds, easy-trim lawn edges.

Environmentally low impact/restorative: Containing some habitats for wild creatures, not using chemicals, composting.

Again, your objective function rules out certain things as unacceptably damaging to the environment, but also makes some concessions to attractiveness and easy maintenance.

A better garden – part 3: Suddenly everything changes as you discover you are to become a parent. Now the objective function changes a lot:

Attractive: Neat, segregated, green or blooming all year round.

Low maintenance: Low maintenance plants (daisy, lavender, etc), weed suppressing mulches for all beds, easy-trim lawn edges.

Environmentally low impact/restorative: Containing some habitats for wild creatures, not using chemicals, composting.

Safe: No poisonous species. Safe furniture. No big steps.

Fun: Toys and toy storage are needed.

Robust: Football proof species. Perhaps more paving. A delineated play area.

Easy to create: Minimum new landscaping, paving, etc.

The weighting that feeds into the objective function now gives a high priority to safety and low maintenance, at the expense of attractiveness.

Explicit objective functions and quantification

There are two strong reasons for making objective functions more explicit and trying to quantify them. Firstly, humans are very bad at judging the value of combinations – much worse than most people think. Secondly, quantification is much easier than you might think, as the following techniques will show. The quantification might just be putting numbers on subjective feelings. You don't always need evidence and often can't get it anyway.

Example: Negotiations. The value of quantifying objective functions (even subjective ones) in a negotiation has been shown by Howard Raiffa in a series of simulated negotiations. The scenario was a negotiation between a city council and a union over 11 issues including pay, hours, vacations, and the fate of a hated council official. A large number of pairs of people played the roles of union and city negotiators in various conditions. Some were given confidential instructions that showed their valuation of various outcomes on each issue using a points system. Others had similar confidential instructions but the numbers were replaced by words and phrases intended to convey about the same values. Negotiations where both negotiators lacked numbers were highly variable in outcome and the most inefficient in the sense that joint gains were left on the table. Both teams were better off with numbers regardless of what the other team were using.

Making our objective functions more explicit is a fascinating subject and there are a number of useful techniques. Multi-Attribute Utility Theory (MAU) is the name for models that use ‘utility’ as a kind of common currency for human value. The idea is that the utility of some object or future situation depends on more than one attribute.

‘Conjoint analysis’ is the name for a group of techniques for making MAU models of the way people value different attributes of things. It arose from mathematical psychology and is most often used in market research to find out what combination of features is most attractive to potential customers.

The practical attraction is that with some simple software it is possible to find out how a person values different aspects of the future or of things, and so create an approximate model of their objective function in just a few minutes. This can be done for an individual, or for a group of individuals. If a leader wanted to know what people thought they should be aiming for in the organisation this is a simple and unusually precise way to do it.

Example: Project trade offs. A rather generic way to assess a person's priorities in a project is to use three attributes of the project's outcome: cost, completion date, and quality of result. A number of levels for each of these must be defined specific to the project. The conjoint analysis program then asks people, individually, questions about which trade offs they prefer. For example, ‘What is your preference between a plan that means finishing the project in December for a cost of £2m, and finishing in October for £2.5m, all other things being equal?’ Differences between individual views will quickly become apparent.

To apply conjoint analysis you have to define the object or situation in terms of a number of attributes, each of which has two or more possible levels. (If an attribute varies continuously so that there are infinitely many levels it can take it is still possible to choose a small sample of these and interpolate approximately for levels in between.) The software then poses a series of questions about how much you prefer some combinations of attribute levels to others, and uses your answers to build up a multi-attribute utility function.
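A minimal sketch of the setup step follows, using made-up attributes and levels for a project. It only enumerates the combinations and the possible pairwise questions. Real conjoint software asks a small, carefully chosen subset of these, and how it chooses them is not shown here.

```python
from itertools import combinations, product

# Hypothetical attributes, each with two levels.
attributes = {
    "cost": ["£2m", "£2.5m"],
    "completion": ["October", "December"],
    "quality": ["basic", "full"],
}

# Every combination of one level per attribute is a candidate profile.
names = list(attributes)
profiles = [dict(zip(names, levels))
            for levels in product(*attributes.values())]

# Each pair of distinct profiles is a possible "which do you prefer?" question.
questions = list(combinations(profiles, 2))
print(len(profiles), "profiles,", len(questions), "possible pairwise questions")
```

Even three attributes with two levels each give 8 profiles and 28 possible pairings, which is why the software has to be selective about what it asks.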

In the simplest models each attribute level has a utility worked out for it, and the total utility of a situation is simply the sum of the utilities attached to each attribute value. (In other models the value of an attribute level depends on the levels of other attributes, but these require asking more questions and are rarely much more accurate so are less commonly used.)
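The simplest, additive model can be sketched in a few lines. The utilities below are invented. In practice they would come out of the conjoint analysis.

```python
# Hypothetical utilities for each attribute level.
part_worths = {
    "cost": {"£2m": 6.0, "£2.5m": 1.0},
    "completion": {"October": 4.0, "December": 2.0},
    "quality": {"basic": 0.0, "full": 5.0},
}


def total_utility(profile):
    """Additive model: sum the utility of the chosen level of each attribute."""
    return sum(part_worths[attr][level] for attr, level in profile.items())


plan_a = {"cost": "£2m", "completion": "December", "quality": "full"}
plan_b = {"cost": "£2.5m", "completion": "October", "quality": "full"}
print(total_utility(plan_a), total_utility(plan_b))
```

With these numbers the cheaper December plan scores 13 and the dearer October plan scores 10, so this person would rather save the money than finish two months earlier.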

The model can even give an idea of the relative importance of different attributes. However, this is for a given set of possible levels for each attribute and meaningless otherwise. For example, if the difference in utility between the best and worst levels of attribute A is 10 and the difference between best and worst for attribute B is 5, then A is twice as important as B for the attribute levels considered.

So, in selecting levels for attributes in your testing it is a good idea to choose them to span the full feasible range for every attribute so that the relative importance of attributes is not misleading.
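The dependence of importance on the levels tested is easy to demonstrate. The sketch below uses one common convention, which is an assumption on my part: an attribute's importance is its best-minus-worst utility range as a share of the total of all such ranges. The utilities are invented.

```python
def importance(part_worths):
    """Share of each attribute in the total best-minus-worst utility range."""
    ranges = {attr: max(utils.values()) - min(utils.values())
              for attr, utils in part_worths.items()}
    total = sum(ranges.values())
    return {attr: r / total for attr, r in ranges.items()}


# Hypothetical utilities: A spans a range of 10, B a range of 5.
wide = {"A": {"low": 0.0, "mid": 5.0, "high": 10.0},
        "B": {"low": 0.0, "high": 5.0}}

# Same person, but only the low and mid levels of A were tested.
narrow = {"A": {"low": 0.0, "mid": 5.0},
          "B": {"low": 0.0, "high": 5.0}}

print(importance(wide))
print(importance(narrow))
```

Over the full range A comes out twice as important as B. With A's top level left out of the testing the two appear equally important, though nothing about the person's preferences has changed.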

Putting numbers into a simple formula improves decision making. Humans have so much difficulty combining several factors together in any decision that even apparently crude models perform better, e.g. a simple linear model such as: overall score = L1 × w1 + L2 × w2 + L3 × w3, with three attribute levels (L1, L2, L3) multiplied by three weights (w1, w2, w3). Numerous experiments have shown that making probability judgments (a similar task involving combining factors) is better done with a linear function than with unaided judgment. If the level values are ‘normalised’ so that they have the same distribution as each other even choosing the weights at random gives a judgment more accurate and consistent than unaided human judgment. Conjoint analysis means we can assign meaningful weights that mimic human judgments, but perform more consistently.
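Here is a minimal sketch of such a linear model. Converting each attribute to z-scores (mean 0, standard deviation 1) is my assumption about what ‘normalised’ could mean in practice, and the options, ratings, and weights are invented.

```python
from statistics import mean, pstdev


def normalise(values):
    """Rescale to mean 0 and standard deviation 1 (z-scores)."""
    m, s = mean(values), pstdev(values)
    return [(v - m) / s for v in values]


def linear_scores(columns, weights):
    """Score each option as L1*w1 + L2*w2 + ... after normalising each column."""
    normalised = [normalise(col) for col in columns]
    n_options = len(columns[0])
    return [sum(w * col[i] for w, col in zip(weights, normalised))
            for i in range(n_options)]


# Hypothetical ratings of four options on three attributes (higher is better).
L1 = [3, 5, 4, 8]
L2 = [200, 100, 400, 300]
L3 = [0.2, 0.9, 0.5, 0.4]

scores = linear_scores([L1, L2, L3], weights=[0.5, 0.3, 0.2])
best = scores.index(max(scores))
print([round(s, 2) for s in scores], "best option index:", best)
```

Normalising first stops an attribute measured in hundreds, like L2 here, from swamping one measured in fractions, so the weights alone express relative priority.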

Faster uncertainty management extended to include goal systems

The techniques of most value are those that allow us to make powerful inferences about the actions we should take, quickly and easily, from limited and poor quality information. They should help us pick out the relevant information from a confused situation, quickly and reliably, and allow us to make the main decisions about future action with ease.

With the ability to get close to good answers quickly, it only remains to add more sophisticated refinements where necessary for precision in big decisions.

Inferences from degrees of uncertainty

A basic technique is to list out areas of relevant uncertainty (or update your existing list), consider the amount of uncertainty faced, and consider the extent to which you could reduce that uncertainty and how you would do it.

Knowing the common reasons for uncertainty can make it easier to spot:

Novelty – particularly where new conditions are different from conditions that have been unchanging for some time and which you have become accustomed to. It can be difficult to identify and review all the ways in which assumptions about that factor have become embedded in your current behaviour so errors are common.

Complexity – particularly when the complexity is from numerous, interconnected elements interacting.

Speed – because if you have less time to gather information and think you will have to guess more.

Intangibles – especially psychological things. Predicting human behaviour is extremely difficult.

First cut actions flow as follows:

If uncertainty is high for a relevant area and it would be easy to reduce it by research, decide to do the research, though not if that research could influence actual outcomes adversely (e.g. don't ask a competitor if they intend to block your next strategic move; it could prompt them to do so).

If uncertainty is high for a relevant area and we will probably get useful new information if we monitor and wait for it, decide to monitor. (This decision is independent of the research decision.)

If uncertainty will remain high whatever we do, choose one of the robust patterns of action (see below) such as multiple-stages, portfolios, reusable blocks, or cheap commitments (though you would probably want to do so anyway). Do not let someone else push risk onto you unless you have to.

If this leads to too many actions or to actions whose cost-benefit is in doubt one can always prioritise the actions and decide which are worthwhile.
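The first cut rules above can be written down as a small function. This is one possible reading, not a definitive procedure: the parameter names are hypothetical, and I have taken ‘uncertainty will remain high whatever we do’ to mean that neither research nor monitoring is available.

```python
def first_cut_actions(area, uncertainty_high, research_easy,
                      research_could_backfire, waiting_will_inform):
    """Apply the first cut rules to one area of uncertainty."""
    actions = []
    if not uncertainty_high:
        return actions
    # Research only if it is easy and will not prompt a bad outcome.
    if research_easy and not research_could_backfire:
        actions.append(f"research {area}")
    # The monitoring decision is independent of the research decision.
    if waiting_will_inform:
        actions.append(f"monitor {area}")
    # Assumed reading: nothing will reduce the uncertainty, so design for it.
    if not actions:
        actions.append(f"use a robust pattern for {area}")
    return actions


print(first_cut_actions("customer demand", True, True, False, True))
print(first_cut_actions("competitor response", True, True, True, False))
```

The resulting list is only a starting point, to be prioritised and pruned as the text describes.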

Example: Running conferences. Imagine you have a job in the Conferences department of a thriving society for people who study lichens. Your role is to gather ideas for conferences, arrange them, sell them, run them, and report on the results obtained. You've been doing the job for a year and it's turned out to be a lot more difficult than you expected. On top of all the stress of getting everything ready for each conference there is the problem of deciding whether to go ahead with a conference idea. It all depends on how many people attend, which is proving very difficult to predict. Nobody in your department seems to have more than a vague idea, yet they still seem confident they can predict demand and always act surprised when things turn out differently from their expectation.

One of the most awkward situations is to have to abandon a conference because of poor take up after it has been advertised and some people have signed up. This has happened three times in your first year and it is more costly than you expected because the venue is usually paid for in advance and only a small part of the fee is refundable.

Thinking about the uncertainty you face it is obvious that it is mainly uncertainty about demand. This is generated by the intangible nature of the interests of the scientists who might come, and is worse for conferences that are on unusual topics not previously explored. By contrast, the annual conference on Scandinavian tree lichens has been running for 12 years and attendance is pretty steady.

However, this uncertainty can be reduced by appropriate research. You decide to try a survey using a market research method called ‘conjoint analysis’ at the next big conference and also on the society's web site to find out what the members value in a conference (including rating specific topics), and exactly how much. For example, what is the impact of the venue, the time of the year, the narrowness/breadth of the topic area, the style of the presentations, and the reputation of the main speakers? Using the analysis will give you a much better chance of proposing conferences with a good chance of at least breaking even.

The uncertainty can also be reduced by waiting longer to see how many people actually do sign up. However, for waiting to be affordable the problem of non-refundable venue fees has to be at least reduced. You survey suitable conference venues to find out their cancellation fees and discover that some will refund closer to the date of the conference than others. By favouring these venues for novel conferences, encouraging members to sign up early, and monitoring the growth of committed attendees you can avoid the venue fee problem much more often than in the past.

Inferences from specific uncertainties

Another basic technique is to list out specific uncertainties in a relevant area (or update your existing list) and consider the extent to which you could reduce that uncertainty, influence the probabilities involved, or change the impact of alternative outcomes.

Decisions about how to reduce the amount of uncertainty by research and monitoring flow as previously described. For influencing probabilities and impacts, first cut actions flow as follows:

If you see a way of reducing the negative impact and/or increasing the positive impact of a possible outcome, note it down as it might be useful. (Basic techniques include sharing the impact with someone else, having a contingency plan and any necessary resources in place, having some fallback in place, getting insurance, creating a buffer or insulation, reducing a commitment, weakening the causal links between the outcome and the negative consequences, and giving yourself more than one chance.)

Having considered ways to modify the impact of outcomes, do you see any ways to make outcomes that are, overall, positive in their impact more likely? Do you see how to make outcomes that are, overall, negative in their impact less likely? If so, note them down. (Basic techniques include weakening/strengthening the causal link between drivers of the outcome and the outcome itself, putting your best people on the most important uncertainties, altering things that are drivers of the probabilities, and avoiding combinations of hazards at the same time by changing the timing of events.)

Do any of the actions noted down look worthwhile? If so, decide to do them.

Once again, having quickly roughed out some actions it may be worthwhile going into more detail to get precision or a more comprehensive and knowledgeable analysis by using a more sophisticated approach as well. There are lots of different styles to choose from but actual practice tends to be let down by a range of reasoning errors. My paper on ‘Straight and crooked thinking about uncertainty’ mentions several.

Considering the uncertainty around objectives

Having identified a goal system it is helpful to have a quick look to see which elements have most uncertainty attached, and which are most likely to change in future. Knowing that a particular element is likely to change, you can make it easier to handle those changes should they arrive.

Often, the goals are the result of a customer's requirements. The customer might be internal or external to the organisation concerned. To identify uncertainty around these you can talk to the customer, and also consider the conditions they face. This helps identify potential changes of requirements – perhaps even before the customer recognises them.

Using robust project patterns

Projects can be designed in various ways and it helps to use one or more of the robust patterns in your designs. The main robust patterns are:

Multiple-stage projects where each stage is valuable in itself, with opt outs: In this pattern there are two helpful things going on. Firstly, each project stage is designed so that it is worthwhile in itself and would be desirable even if it was decided not to go on with further stages. Secondly, there is the option to quit after each stage, and do no more stages. Both contribute to average returns, and improve them provided excessive sacrifices were not made to design the project in that way.

Portfolios: The portfolio pattern means putting your eggs in several baskets, instead of just one. This is the normal approach with investing in company shares, but also applies to research projects, new products, new systems, and so on. The idea is often to try lots of things and monitor closely for signs of successes that can then be pushed harder, while failures are pruned away. The benefit of diversification in this way is greatest if the performance of the individual investments is not correlated. Sometimes people standardise something in an organisation for perceived efficiency benefits without allowing for the loss of variety and the implications of having reduced learning by leaving fewer experiments with which to discover success.

Reusable blocks: Building or achieving something that could be re-used in other projects or to meet other objectives is another useful pattern. Reusable blocks tend to have a more robust value across a variety of objective functions, i.e. they are more likely to stay useful even when the objective function changes. Reuse also has good environmental credentials.

Cheap commitments: Generally it is better to make low commitments if you can. Avoid up-front investment wherever possible. Delay commitments. Make your commitments low.

Pareto value sequencing: Often, you can divide the work and prioritise it so that the items of greatest value can be done first, with less important items left until later. If time or resources run out before the lesser items are done this is less damaging than if an important item is left undone. The project degrades gracefully. For example, it is often safer to create a rough but complete version of a deliverable first, then do a series of refinements, than to do half the deliverable to full refinement, then start working on the second half. Another example would be trying to get everyone in a team to learn Dynamic Management by Christmas. Not everyone's skill in Dynamic Management will be equally important, so start where Dynamic Management is most useful and move out from there.

Systematic risk reduction through variable costs: Systematic risk is risk that comes from general economic conditions and affects just about all businesses though to different extents. Some investors use a number called beta to represent how much a company's results are affected by general economic conditions. These investors consider a low beta desirable and worth paying extra for because it shows that returns from the company are steadier whatever the economic climate. One way to reduce your beta is to do what you can to keep fixed costs a low proportion of your total costs. Fixed costs are costs that do not vary with your output or sales.

Systematic risk reduction through counter cyclical activities: Another way to get a lower beta is to combine two activities that react in opposite ways to economic conditions. For example, insolvency plus services to create new companies, or selling new and used cars, or housebuilding and renovation, or taking deposits and making loans, or a recruitment agency that also does outplacement advice.
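To illustrate the first of these patterns, the sketch below compares the expected value of a two-stage project committed up front with the same project run with an opt out after stage one. All the figures are invented, and it assumes, for simplicity, that stage one fully reveals whether stage two will pay off.

```python
def committed_value(v1, c1, v2, c2, p_good):
    """Expected net value when both stages are committed up front."""
    return (v1 - c1) + (p_good * v2 - c2)


def opt_out_value(v1, c1, v2, c2, p_good):
    """Expected net value when stage 2 is run only if stage 1 shows it will pay off."""
    # Skip stage 2 altogether if it is not worth its cost even in the good case.
    stage2_gain = max(v2 - c2, 0)
    return (v1 - c1) + p_good * stage2_gain


# Hypothetical figures: stage 1 is worthwhile in itself (120 for a cost of 100);
# stage 2 costs 200 and returns 300, but only with probability 0.5.
figures = dict(v1=120, c1=100, v2=300, c2=200, p_good=0.5)
print(committed_value(**figures), opt_out_value(**figures))
```

With these numbers the committed project has an expected value of -30, while the staged version with an opt out is worth +70. The difference is the stage two cost that is avoided in the half of cases where stage two would have failed.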

While trying lots of things to see what works is essential in many common areas and should be happening in all organisations, trial and error alone is not as efficient as trial and error plus expertise. For example, people who design user interfaces for computer systems vary in their skill. Really skilful ergonomists know that only usability tests will drive out the usability bugs in their designs because of the difficulty in predicting human responses. However, experiment has shown that the initial design by an expert ergonomist is generally better than a non-expert's design after several rounds of usability testing.

Faster teamwork that gets more people involved in rethinking

Dynamic Management is not a mandate for unconstrained, uncoordinated improvisation. It is not a mandate for undirected, haphazard experimentation. On the other hand, Dynamic Management does work much better if people from all levels and areas of an organisation can feed into decisions about goal systems, plans, uncertainty management, and so on. Uncertainty and the risk of failure are much reduced when plans are made rapidly right down to the crucial details on which performance will depend. People need to be able to see the whole picture and make their plans accordingly.

Example: Sam Walton. The man who created Wal-Mart and made himself a billionaire in the process was relentless in his search for grass-roots ideas to improve his business. Many of his clothes were bought from his own stores. He visited competitors' shops almost compulsively. On one occasion he flagged down a Wal-Mart 18 wheeler to ride for 100 miles, pumping the driver for ideas all the way. The driver was not expecting his passenger but knew, like other employees, that Sam would take his ideas seriously.

Involving team members at various levels in thinking about changes to goals and the objective function has nothing to do with achieving ‘buy in’. The reason for doing it is to maximise learning and get the best information, experience, and thinking applied to management. This contrasts with typical management thinking from the late twentieth century where a senior elite decides the goals then tries to get them supported by ‘involving’ people at lower levels to get their ‘buy in’. This has always seemed somewhat phoney to me as so much has actually been decided and will not change even if it is discussed. Often, the discussion is really negotiation over performance related pay or evaluation targets in which underlings try to argue for the easiest targets possible while their bosses demand more while trying not to seem to be imposing targets, even though eventually they do.

In Dynamic Management, the involvement of people at ‘lower’ levels in the corporate hierarchy is to get the benefit of their knowledge and take account of their interests to some extent, and will normally lead to some modifications to goals and the objective function. This is because so much depends on the operational detail of performance (particularly in contacts with ‘customers’).

In ‘Bottom Up Marketing’, advertising experts Jack Trout and Al Ries, famous for inventing the modern marketing concept of ‘positioning’, point out that so much depends on having a tactic that wins in the marketplace, and such tactics are so hard to find, that making a strategy without having one identified is likely to lead to failure. They give numerous examples of companies where a senior elite set out a vision of rising revenues and profits, handed targets down to lower levels of management for them to find tactics that would deliver those financial results, and watched while no such tactics could be found and the company lost money.

Ries and Trout liken a winning tactic to a nail, and strategy to the hammer. The purpose of the strategy is to drive home the nail as strongly as possible. But you have to have a nail, and you should make sure you have a good one before making commitments to a strategy.

(This is a general rule of uncertainty management. If there's a big uncertainty in a venture, it is usually preferable to try to resolve it early if possible rather than waiting until you have committed more resources.)

Goals should reflect what you and your organisation are capable of doing. To find out what you're capable of doing you need to get down to the detail that determines the results of trading or service delivery. Therefore, what happens at the detailed, everyday level familiar to those at the bottom of the hierarchy is vital to the goal system and must be brought into the thinking. The input of people who actually do the work of the organisation rather than just managing it must be able to influence all the goals and the objective function.

Efficient involvement in changes

Getting lots of information from different parts and levels of an organisation pulled together and considered, repeatedly as conditions change, demands extremely efficient techniques for communication and decision making. If every piece of news meant getting away for an ‘off site’ with the whole organisation to debate it nothing else would get done.

There are a number of techniques that can be efficient enough provided they are done well and the appropriate techniques are chosen to suit circumstances.

Who should be involved in decisions to change goals, world models, etc? It cannot be everyone, every time as this would mean nothing could be changed quickly.

The group's leaders will have to make some decisions about who needs to agree to each change, who needs to give their views, who will just be told about the change afterwards, and who won't find out unless they take the trouble to look at the group's documentation. They will also have to decide who will talk to whom, what groups ought to meet, and so on.

Here are some techniques that can be used:

Near decomposable structures: Often an organisation can be divided into units that are ‘near decomposable’ in the sense that communication within the unit needs to be much greater than between the unit and other units. Often, it will be obvious in advance that some goals only concern an individual or sub-set of the group so wider consensus or involvement is not needed. Full advantage needs to be taken of this.

Prefabrication of solutions: Both strategy and tactics have to be in place at once, and before commitments are made, if the risk of finding there is no solution is to be mitigated. Furthermore, a lot of this has to be revised frequently (more than once a year). This is not possible by following sequential problem solving methodologies. However, it is done successfully by many people because they already have a vast mental store of solutions to potential problems, and they assemble these prefabricated solutions together to tackle new situations. Some simple modelling of problem spaces suggests that the benefits of tackling problems simultaneously from the top down and bottom up increase as problems grow in size. For large problems, working from both directions is orders of magnitude more efficient than proceeding in one direction only.

Well connected leaders: Leaders tend to be more connected than others in an organisation and this is traditionally why they are the ones who get together to make the big decisions. However, there is a problem here because in practice people who are leaders in large organisations are still too far removed from the real work of the organisation to represent the front line. So much information is lost as it percolates up that leaders are left making abstract decisions on the basis of generalisations that are often distorted along the way by preconceptions and other human weaknesses. To overcome this leaders need to have direct contact with people at all levels.

Getting facts behind the suggestions: One problem with suggestion schemes, focus groups, and so on is that the vast majority of the points raised are bad ideas or requests for more money for less work. Ideas tend to be poorly thought out, unworkable cliches that sound bright and politically correct but lack the practical details and efficiency refinements that would give them a chance of working. Not surprisingly nothing comes of such ideas though most leaders try to look as if they are taking them seriously and may even try them at times. This is only to be expected since everyone at every level in an organisation suffers from the same problem: we all have just a part of the picture.

Detailed research presented with rich detail: Another way to bring crucial details into the board room is to commission detailed research that captures them and to present it in a realistic, rich, dramatic, personal way. Video tape, recordings, quotes of people speaking, anecdotes, and key statistics can bring home points that contradict the preconceptions of the audience and stimulate beneficial change.

Managed communication channels: Conversations in which different parts of the picture are moved and shared around an organisation can be planned and encouraged to happen. (Databases and emails can be used too, but mostly they create a barrier to communication rather than encouraging it. It is better to have a teleconference or meeting where people can ask unscripted questions and make rapid progress.) Desired flows of information can be drawn on a diagram where squares represent groups or individuals in the organisation and annotated arrows show information passed between them. The frequency, topics, and attendees of the meetings that carry the information flows can easily be written on the diagram and used to explain what is proposed. For example, if sales people should be passing on their experiences to the marketing department and product development team then schedule some quick but focussed meetings to do exactly that, regularly or on some other timing basis.

Co-location: If people work close together – adjacent desks, or in a project room for example – communication is encouraged.

Representative groups of the right type of people: Sadly there is a second reason why most suggestions are poor ones, which is that only a few people are good at planning and problem solving where the solution requires new thinking. Many of the problems organisations seek to solve are long standing ones that have stubbornly resisted all obvious solutions so original thinking is normally needed. Individuals capable of original thinking can exist at any level but they are a minority. A group put together to tackle some issue or stimulate some change should be composed chiefly of these people, as far as possible, consistent with the need to get the right experiences and connections into the group.

Genuine openness to input: The number of people able to put forward good ideas can be increased by showing that it is worth putting in the effort to create them. When people feel that there is no point making any real proposals because the leading elite has already made up its mind and the ‘consultation’ is phoney then they will not make the effort to develop ideas properly. Dynamic Management means that even the goal system is up for discussion, so over time Dynamic Management means more people with well thought out ideas to put forward. In addition, if people are given more of the information that leaders have they can make better suggestions.

Building a mental store of prefabricated solutions to likely problems takes a long time and a lot of effort and thought. This is rarely done, and leaders have to convince their teams they are open to suggestions if they expect people with this sort of ability to come forward.
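The claim under ‘Prefabrication of solutions’ that working from both ends becomes far more efficient as problems grow can be illustrated with a very simple model. The sketch below is not the modelling referred to in the text. It assumes a problem space shaped like a tree with the same number of options (`branching`) at every step, and it assumes the top down and bottom up searches meet half way at no extra cost. The function names are hypothetical.

```python
def one_direction_cost(branching: int, depth: int) -> int:
    """Options examined when working from one end only: b + b^2 + ... + b^depth."""
    return sum(branching ** level for level in range(1, depth + 1))


def both_directions_cost(branching: int, depth: int) -> int:
    """Options examined when two searches start at opposite ends and meet in the middle."""
    top_half = depth // 2
    bottom_half = depth - top_half
    return one_direction_cost(branching, top_half) + one_direction_cost(branching, bottom_half)


def advantage(branching: int, depth: int) -> float:
    """How many times cheaper it is to work from both ends (under the assumptions above)."""
    return one_direction_cost(branching, depth) / both_directions_cost(branching, depth)


# Illustrative sizes only: 3 options per step, problems of increasing depth.
for depth in (4, 8, 12):
    print(f"depth {depth}: both-ends advantage is about {advantage(3, depth):.0f}x")
```

Under these assumptions the advantage grows roughly exponentially with the size of the problem, which is consistent with the ‘orders of magnitude’ remark for large problems.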

To bring all parts of the picture together it is not particularly helpful to just put forward suggestions. It is much more useful for everyone to hear the problem or incident that prompted the suggestion, and other related facts too. It may be that the suggestion is a poor one, but a different interpretation making use of information from someone else leads to a truly good idea. The suggestion is not even needed, though if it exists it should be put forward too.

This applies when senior managers put forward their business plans and when front line staff explain their bright ideas. The listeners should always try to get to the experiences that prompted the ideas. This improves understanding of the ideas as well as giving the possibility of producing new, better ideas from bringing together more parts of the picture.

Too many organisations resolve to ‘improve communication’ but don't actually draw out what should be communicated and between which parties.
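One way to ‘draw out’ what should be communicated is to write the information flow diagram down as data. The following is a minimal sketch only, assuming each arrow on the diagram records a sender, a receiver, a topic, a channel, and a frequency. The class name, field names, and example values are hypothetical; only the flows from sales to marketing and to product development come from the text.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class InformationFlow:
    """One annotated arrow on the diagram: who tells whom about what, and how."""
    sender: str
    receiver: str
    topic: str
    channel: str
    frequency: str


def unconnected_parties(parties, flows):
    """Return the parties (squares) that no arrow starts from or ends at."""
    connected = {f.sender for f in flows} | {f.receiver for f in flows}
    return [p for p in parties if p not in connected]


def describe(flow):
    """Render one arrow as a line of text for a written communication plan."""
    return f"{flow.sender} -> {flow.receiver}: {flow.topic} ({flow.channel}, {flow.frequency})"


# Hypothetical example data, for illustration only.
PARTIES = ["sales", "marketing", "product development", "customer support"]
FLOWS = [
    InformationFlow("sales", "marketing", "customer reactions", "short meeting", "fortnightly"),
    InformationFlow("sales", "product development", "customer reactions", "short meeting", "monthly"),
]

for flow in FLOWS:
    print(describe(flow))
print("No planned flows yet:", unconnected_parties(PARTIES, FLOWS))
```

Listing the parties with no planned flows is a quick check that the plan says who should talk to whom, rather than just resolving to communicate more.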

Spreading knowledge

It is helpful if members of a team working with Dynamic Management know the goals and objective function, and know the world models being used. They also need to know about changes in the factors that drive the goal system. This helps them fill in the detail for themselves and organise and act without waiting for centrally issued commands. However, it is unrealistic to expect that everyone will know and understand all of this material. Inevitably, some people will have a much better grasp than others.

Dynamic Management tends to help with this because it requires frequent reviews of goal systems and their related thinking. By going through that thinking repeatedly group members improve their memory of it, their understanding of it, and their ability to act in accordance with it.

This contrasts with the common experience of finding that a strategic review has become dust-gathering shelfware.

Shared views?

It is also unrealistic to expect a commonly held view of what the goals etc should be. Some people in a group will believe that the goals etc in use are the right ones, but most will see them as just ‘the ones we're working with at the moment’ and disagree with some or all of them. Provided people work along with the formally adopted group position there is no harm in dissent. On the contrary, it is from the dissenters that improved thinking is likely to arise.

Personally, I dislike being in a group where holding a different view triggers strong social pressure to conform both in action and belief. In addition, I believe it inhibits thinking and slows adaptation. The technical term for this kind of conformity is ‘groupthink’.

Skilled uncertainty management tends to reduce disagreement – or at least makes it possible to live more harmoniously with it. Much of the difference in view between people is in their subjective estimates of the likelihood of various events. Often, the heat of an argument exaggerates the magnitude of the difference. When this is found to be the case it is often possible to agree that the future is not known by anyone and we can at least agree that the outcome is uncertain. That may be all the common ground needed to agree an appropriate action, such as monitoring the risk.

For example, suppose a plan is put forward and adopted, but soon a member of the group realises that the plan is a poor one because it is based on a bad forecast and will fail because of a factor that has not been taken into consideration. While proponents of the original plan may not accept that their plan is flawed, they might still be persuaded that there is a risk that threatens their plan, and that a sensible step can be taken to manage that risk. Taking that step may well reveal whether or not the plan was flawed and so provide the facts needed to revise it without further argument.

Empowerment

Empowering people in an organisation is also helpful in creating the ability to adapt to a changing, unpredictable, complex, even chaotic environment. This does not help directly with the problem of getting low level input into high level goals, but it does allow low level decision making to be better aligned to overall strategy.

Empowerment has been well explained by a number of authors and it is generally accepted that it involves:

Providing a framework of constraints/principles/values within which people are encouraged to make decisions themselves as if they were owners of the company.

Providing information that traditionally only leaders would have, so that everyone is able to make decisions.

Providing continued encouragement and not punishing honest mistakes, since empowerment takes time and people don't always get it or want it initially.

Good reasons

The various elements of the world view and goal system are likely to be more acceptable and memorable if they are explained with good reasons. For example:

| Without good reasons | With good reasons |
|---|---|
| ‘Christian values are part of our culture.’ | ‘This organisation was founded by a group of Christians five years ago, so Christian values are part of our culture.’ |
| ‘We aim to introduce a range of 3 to 6 new products aimed at the 5 - 9 age group this Christmas. A key objective for the design team will be to come up with something really exciting and attractive.’ | ‘Our competitors have been bringing out some very appealing products aimed at our younger customers. We think we can also do better in that area and defend our share, so we aim to introduce a range of 3 to 6 new products aimed at the 5 - 9 age group this Christmas. Obviously, a key objective for the design team will be to come up with something really exciting and attractive.’ |
| ‘At next month's exhibition we must make a strong impression as well as learning as much as we can about how people react to the advantages of our product.’ | ‘At next month's exhibition our stand will be right next to the market leader's. Because we're new we're likely to get a lot of interest from people who have never heard of us before. It's vital we make a strong impression as well as learning as much as we can about how people react to the advantages of our product.’ |

I hope you can see that the reasons provide a context that makes the goals more important and compelling. They convey a sense that the leader is aware of what is going on and is making sensible decisions. By contrast, goals without reasons just sound like hot air from optimistic managers with little original or insightful to say.

Control

Dynamic Management provides management control by incentivising people to plan and act towards the latest, best informed goals. These goals are agreed goals, not just any goals people fancy pursuing. The above techniques are designed to bring together the thinking of lots of people quickly, so that goals can be agreed and kept up to date.

Incentives that point in the right direction

In much of the Western world it is now generally accepted that people work better if they have incentives, which normally means pay that depends to some extent on results. However, there are a number of ways this can go seriously wrong. The wrong incentives, strongly applied, can create innumerable problems and even block Dynamic Management.

Distorted objective functions

It is better to base incentives on the objective function than on goals. However, goals are the more usual basis. This tends to lead people to make decisions on the basis of a distorted view of the objective function as well as encouraging fraud and false accounting.

Example: Incentives for salesmen. Before becoming a billionaire by creating EDS from nothing, Ross Perot worked as a computer salesman for IBM. One year IBM introduced a commission scheme where salespeople were paid a good bonus for reaching a certain, challenging figure for total sales in a year. To earn a second bonus they had to sell twice that amount, which was almost impossible. That year Perot sold IBM's top of the range system to a major customer on the first day of the year and by so doing reached the target for a bonus. With no realistic hope of doing it again that year and no incentive to sell anything less, he was disillusioned by the experience, and it helped push him towards going his own way to escape the culture of IBM.
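The arithmetic behind this example can be sketched in a few lines. The figures below are hypothetical, and the step scheme is only an assumed reading of the one described (one bonus for each whole multiple of the target). The proportional scheme is a simple stand-in for an incentive that follows the value of sales more closely; it is not a scheme described in the text.

```python
def step_bonus(sales: int, target: int, bonus: int) -> int:
    """One bonus per whole multiple of the target reached (assumed reading of the scheme)."""
    return (sales // target) * bonus


def proportional_commission(sales: int, target: int, bonus: int) -> int:
    """Hypothetical alternative: pay rises smoothly, reaching `bonus` at the target."""
    return sales * bonus // target


def reward_for_extra_sales(scheme, sales: int, extra: int, target: int, bonus: int) -> int:
    """Extra pay earned by selling `extra` more when already at `sales`."""
    return scheme(sales + extra, target, bonus) - scheme(sales, target, bonus)


# Hypothetical figures, chosen only to make the arithmetic easy to follow.
TARGET = 1_000_000
BONUS = 50_000

for scheme in (step_bonus, proportional_commission):
    gain = reward_for_extra_sales(scheme, sales=TARGET, extra=500_000, target=TARGET, bonus=BONUS)
    print(f"{scheme.__name__}: extra pay for 500,000 more sales after hitting target = {gain}")
```

With the step scheme, a salesperson who has just reached the target earns nothing more for any further sale short of doubling it, even though those sales are still valuable to the company.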